Rough Transformers: Lightweight Continuous-Time Modeling | TickerTrends.io Research Report

The TickerTrends Social Arbitrage Hedge Fund is currently accepting capital. If you are interested in learning more, send us an email at admin@tickertrends.io.

Abstract:

Time-series data in real-world settings often have long-range dependencies and irregular intervals, challenging traditional sequence-based recurrent models. Researchers use Neural ODE-based models for irregular sampling and Transformer architectures for long-range dependencies, but both are computationally expensive. The Rough Transformer addresses this by operating on continuous-time data with lower computational costs. It introduces multi-view signature attention, which enhances vanilla attention using path signatures to capture both local and global dependencies. This model outperforms traditional attention models and offers the benefits of Neural ODEs with significantly reduced computational resources.

Introduction

Traditional Transformers, originally designed for natural language processing, have been adapted for time-series tasks because their attention mechanism can capture long-range dependencies in data. Common applications include forecasting, anomaly detection, classification, imputation, and representation learning.

The paper introduces an innovative model called the Rough Transformer, designed to handle continuous-time data more effectively than traditional machine learning models. This model is particularly significant for fields that deal with data sequences that do not have uniform time intervals, such as finance and healthcare.

Continuous-Time Data Challenges

Traditional models, including standard Transformers, are tailored for evenly spaced data, like text or image data. These models face difficulties with continuous-time data, where time intervals between observations vary. For example, in financial markets, transaction times are irregular, and in healthcare, patient data is recorded at different intervals. This irregularity poses a challenge for conventional models to capture the underlying patterns accurately.

Path Signatures Explained

Path signatures provide a mathematical framework to summarize the essential features of a data sequence. A signature is a collection of iterated integrals of the path traced out by the data, and it is invariant to how that path is parameterized in time. This means that whether the data points are close together or far apart in time, the path signature captures the same underlying information. By incorporating path signatures, the Rough Transformer can handle the complexity of continuous-time data, capturing both local and global patterns effectively.
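To make the invariance concrete, here is a minimal sketch of a signature truncated at depth 2, computed for the piecewise-linear path through a set of points. The function name `signature_depth2` and the toy paths are illustrative assumptions, not code from the paper, and a real model would typically use a dedicated signature library and a higher truncation depth.

```python
import numpy as np

def signature_depth2(path):
    """Depth-2 signature of the piecewise-linear path through `path` (shape [n, d]).

    Returns (level1, level2): the total increment and the d x d matrix
    of second-order iterated integrals.
    """
    path = np.asarray(path, dtype=float)
    d = path.shape[1]
    level1 = np.zeros(d)
    level2 = np.zeros((d, d))
    for delta in np.diff(path, axis=0):
        # Chen's identity for appending one straight segment with increment `delta`:
        # the segment's own second level is 0.5 * delta (x) delta.
        level2 += np.outer(level1, delta) + 0.5 * np.outer(delta, delta)
        level1 += delta
    return level1, level2

# The same geometric path, observed at 3 points and at 5 points.
coarse = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
fine = [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0], [1.5, 0.5], [2.0, 0.0]]

coarse_l1, coarse_l2 = signature_depth2(coarse)
fine_l1, fine_l2 = signature_depth2(fine)
same_signature = np.allclose(coarse_l1, fine_l1) and np.allclose(coarse_l2, fine_l2)
```

In this sketch, `fine` only adds observations that lie on the straight segments of `coarse`, so both describe the same path and `same_signature` is `True`. If time is appended as an extra channel, the signature does retain timing information, which is a modeling choice rather than something this sketch covers.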

Multi-View Signature Attention

The Rough Transformer introduces a multi-view signature attention mechanism, a novel feature that allows the model to focus on both short-term and long-term dependencies within the data simultaneously. Traditional attention mechanisms in models like the standard Transformer often struggle to balance local detail and global context. The multi-view signature attention addresses this by using multiple perspectives to analyze the data, enhancing the model’s ability to understand intricate temporal relationships.
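One way to picture the mechanism is sketched below. It assumes the two views are a global signature (from the start of the series up to the end of each window) and a local signature (over that window only), concatenated into one token per window and passed to ordinary scaled dot-product self-attention. The function names, the depth-2 truncation, the window layout, and the random projection weights are all illustrative assumptions rather than the paper's implementation.

```python
import numpy as np

def signature_depth2(path):
    """Flattened depth-2 signature of the piecewise-linear path through `path`."""
    path = np.asarray(path, dtype=float)
    d = path.shape[1]
    level1, level2 = np.zeros(d), np.zeros((d, d))
    for delta in np.diff(path, axis=0):
        level2 += np.outer(level1, delta) + 0.5 * np.outer(delta, delta)
        level1 += delta
    return np.concatenate([level1, level2.ravel()])

def multi_view_features(path, num_windows):
    """One token per window: [global signature, local signature]."""
    path = np.asarray(path, dtype=float)
    bounds = np.linspace(0, len(path) - 1, num_windows + 1).astype(int)
    tokens = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        global_view = signature_depth2(path[: end + 1])      # start of series to window end
        local_view = signature_depth2(path[start : end + 1])  # this window only
        tokens.append(np.concatenate([global_view, local_view]))
    return np.stack(tokens)

def self_attention(x, rng, d_model=8):
    """Plain scaled dot-product self-attention with random (untrained) projections."""
    d_in = x.shape[1]
    w_q, w_k, w_v = (rng.standard_normal((d_in, d_model)) / np.sqrt(d_in) for _ in range(3))
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(d_model)
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
t = np.sort(rng.uniform(0.0, 1.0, 200))                # irregular observation times
path = np.stack([t, np.sin(2 * np.pi * 3 * t)], axis=1)  # channels: time, value

tokens = multi_view_features(path, num_windows=10)  # shape (10, 12)
output, weights = self_attention(tokens, rng)       # shapes (10, 8) and (10, 10)
```

Here 200 irregularly spaced observations become 10 tokens, and attention runs over those 10 tokens rather than the 200 raw points. The global half of each token carries long-range context while the local half carries detail from the current window.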

Results (with Examples)

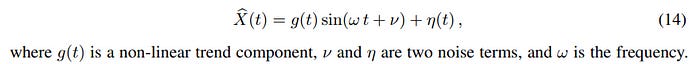

Frequency Classification: Synthetically generated sinusoidal dataset from equation below

Time-to-cancellation of limit orders:

The study examines the time-to-cancellation of limit orders using limit order book data for the AMZN ticker over a single trading day. The analysis utilizes context windows of 1000 and 20000 time-steps to predict order cancellations.

The paper finds that the proposed Rough Transformer achieves the lowest RMSE (Root Mean Squared Error) among the models compared.
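For reference, RMSE is the square root of the mean squared difference between predictions and targets, so lower values are better. A minimal sketch follows; the helper name `rmse` and the numbers are made up for illustration and are not results from the paper.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Illustrative values only: two exact predictions and one that is off by 2.
example_rmse = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```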

HR Dataset:

This dataset consists of time series sampled from patient ECG readings, and each model is tasked with forecasting the patient’s heart rate (HR) at the end of the sample. Once again, the best-performing model was the Rough Transformer.

Computational Efficiency

A significant advantage of the Rough Transformer is its computational efficiency. Unlike Neural ODE-based models, which require substantial computational resources, the Rough Transformer is lightweight: it reduces both compute and memory usage, making it faster and more practical for large datasets. The saving comes from the path signature transformation. Each stretch of the raw series is summarized by a fixed-size signature, so attention, whose cost grows quadratically with sequence length, runs over a much shorter sequence than the raw observations. This efficiency is particularly valuable in real-world applications where processing speed and resource constraints are critical considerations.
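A back-of-the-envelope sketch of why compressing the sequence helps: standard self-attention builds a score matrix with one entry per pair of positions, so its size grows with the square of the sequence length. The 20000-step context comes from the limit order example above; the window count of 100 and the helper names are hypothetical choices for illustration, not settings from the paper.

```python
def attention_score_entries(seq_len):
    """Number of entries in the seq_len x seq_len attention score matrix."""
    return seq_len * seq_len

def signature_dim(channels, depth):
    """Size of a signature truncated at `depth`: channels + channels**2 + ... ."""
    return sum(channels ** k for k in range(1, depth + 1))

raw_len = 20_000   # raw context length, as in the limit order example
num_windows = 100  # hypothetical number of signature windows

raw_entries = attention_score_entries(raw_len)             # 400_000_000
compressed_entries = attention_score_entries(num_windows)  # 10_000
reduction = raw_entries // compressed_entries              # 40_000

# Trade-off: each token gets wider as the truncation depth grows.
token_width = signature_dim(channels=2, depth=2)           # 2 + 4 = 6
```

The trade-off is that each token becomes wider, since the signature size grows geometrically with the truncation depth, so depth and window count have to be chosen together.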

Superior Performance & Real-World Applications

The Rough Transformer has demonstrated superior performance across various time-series tasks. Its ability to manage continuous-time data more effectively than traditional models translates into better predictive accuracy and robustness, and the results above support both the accuracy and the efficiency claims. In finance, it can be used for high-frequency trading, risk management, and market analysis, where transaction times are irregular. In healthcare, it can improve patient monitoring, disease progression modeling, and personalized treatment planning, all of which rely on data recorded at varying intervals. Other fields such as climatology, transportation, and IoT can also benefit from its capabilities in managing continuous-time data.

Conclusion

The Rough Transformer represents a significant advancement in continuous-time sequence modeling. It builds on the foundational principles of the Transformer architecture but innovates by integrating path signatures and the multi-view signature attention mechanism. Path signatures encode the data’s path in a manner that captures its geometric properties, while the multi-view attention mechanism processes these encoded paths from multiple perspectives, allowing the model to learn from both fine-grained details and overarching trends. By effectively handling irregularly spaced data through path signatures and multi-view signature attention, it offers a robust, efficient, and high-performing solution for various applications. This model opens up new possibilities for leveraging continuous-time data in fields that require precise and computationally efficient analysis.

Follow Us On Twitter: https://twitter.com/tickerplus